Meta has published its latest overview of content violations, hacking attempts, and feed engagement. As usual, it includes an array of stats and notes on what people are seeing on Facebook, what they're reporting, and what's getting the most attention at any given time.

For example, the Widely Viewed Content report for Q4 2024 includes the usual gems, like this:

That's less than great news for publishers: 97.9% of the views of Facebook posts in the U.S. during Q4 2024 were of posts that did not include a link to a source outside of Facebook itself.

That proportion has steadily crept up over the last four years. Meta's first Widely Viewed Content report, published for Q3 2021, showed that 86.5% of the posts shown in feeds didn't include a link outside the app.

It's now extremely high, which means that getting an organic referral from Facebook is harder than ever. Meta has also de-prioritized links as part of its effort to move away from news content, which it may or may not change again now that it's looking to allow more political discussion to return to its apps. But the data, at least right now, shows that it's still a pretty link-averse environment.

If you've been wondering why your Facebook traffic has dried up, this could be a big part of the reason.

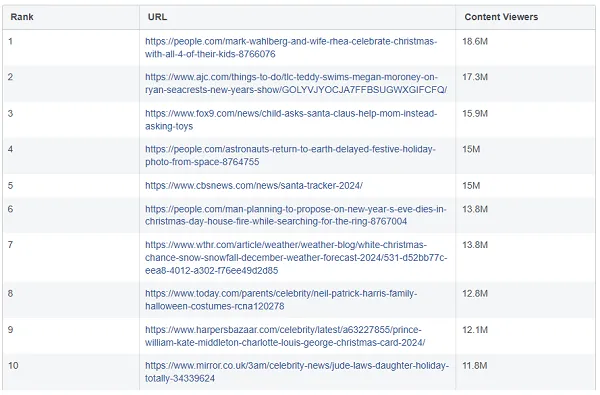

The top ten most viewed links in Q4 also show the usual array of random junk that has somehow resonated with the Facebook crowd.

Astronauts celebrating Christmas, Mark Wahlberg posting a picture of his family for Christmas, Neil Patrick Harris singing a Christmas song. You get the idea: the usual range of supermarket tabloid headlines now dominates Facebook discussion, along with syrupy stories of seasonal sentiment.

Like: "Child Asks Santa Claus to Help Mom Instead of Asking For Toys".

Sweet, sure, but also, ugh.

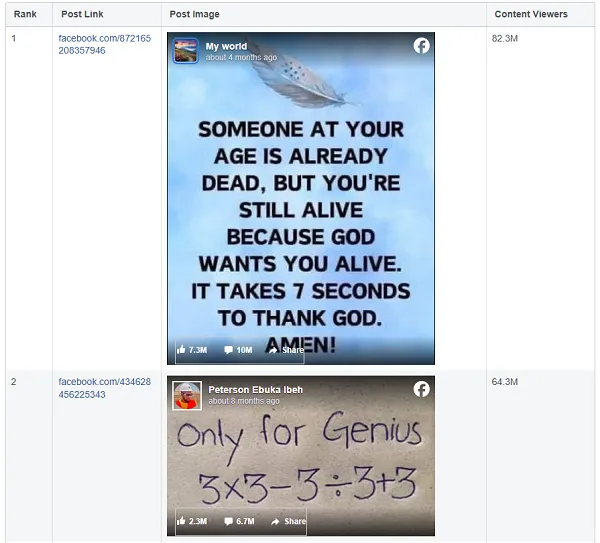

The most shared posts overall aren't much better.

If you want to resonate on Facebook, you could probably take notes from celebrity magazines, as that's the kind of material that seemingly gains traction, while displays of virtue or "intelligence" also still catch on in the app.

Make of that what you will.

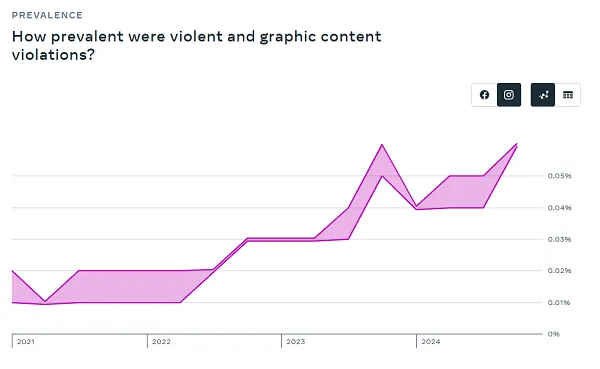

In terms of rule violations, there weren't any particularly notable spikes in the period, though Meta did report an increase in the prevalence of Violent & Graphic Content on Instagram due to adjustments to its "proactive detection technology."

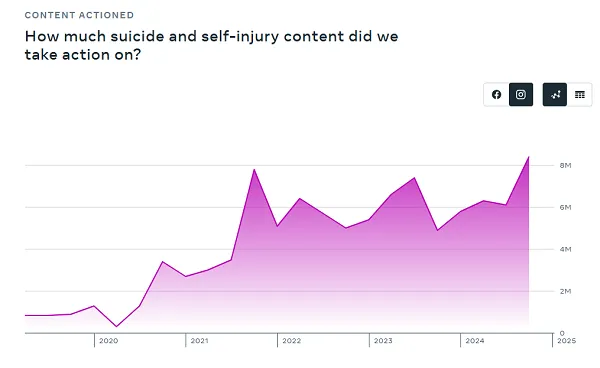

This additionally looks like a priority:

Also worth noting, Meta says that fake accounts "represented approximately 3% of our worldwide monthly active users (MAU) on Facebook during Q4 2024."

That's only notable because Meta usually pegs this number at 5%, which has seemingly become the industry standard, as there's no real way to accurately determine this figure. But now Meta has revised it down, which could mean that it's more confident in its detection processes. Or it has simply changed the baseline figure.

Meta also shared this interesting note:

"This report is for Q4 2024, and does not include any data related to policy or enforcement changes made in January 2025. However, we have been monitoring those changes and so far we have not seen any meaningful impact on prevalence of violating content despite no longer proactively removing certain content. In addition, we have seen enforcement errors have measurably decreased with this new approach."

That change is Meta's controversial switch to a Community Notes model in place of third-party fact-checking. Meta has also revised some of its policies, particularly around hate speech, seemingly bringing them more into line with what the Trump Administration would prefer.

Meta says that it hasn't seen any major shifts in violative content as a result, at least not yet, but that it's banning fewer accounts by mistake.

Which sounds good, right? Sounds like the change is working already.

Right?

Well, it probably doesn't mean much.

The fact that Meta is seeing fewer enforcement errors makes perfect sense, because it's going to be enacting a lot less enforcement overall, so of course mistaken enforcement will decrease. But that's not really the question. The real issue is whether rightful enforcement actions remain steady as Meta shifts to a less supervisory model, with more leeway on certain speech.

As such, the statement seems somewhat pointless at this stage, and more of a blind retort to those who've criticized the change.

In terms of threat activity, Meta detected several small-scale operations in Q4, originating from Benin, Ghana, and China.

Though perhaps more notable was this explainer in Meta's overview of a Russia-based influence operation called "Doppelganger", which it has been tracking for several years:

"Starting in mid-November, the operators paused targeting of the U.S., Ukraine and Poland on our apps. It's still focused on Germany, France, and Israel with some isolated attempts to target people in other countries. Based on open source reporting, it appears that Doppelganger has not made this same shift on other platforms."

It seems that after the U.S. election, Russian influence operations became less interested in influencing sentiment in the U.S. and Ukraine, at least on Meta's apps. That's an interesting shift.

You can read all of Meta's latest enforcement and engagement data points in its Transparency Center if you're looking to get a better understanding of what's resonating on Facebook, and of the shifts in its safety efforts.