2001: A Space Odyssey left an indelible mark on me when I was a child.

HAL 9000, the ship’s AI, embodied the age-old idea that a creation might rise up against its creators.

And the scene where HAL refuses to open the pod bay doors still haunts me.

But what was once science fiction seems to keep bleeding into real life. And recent developments in artificial intelligence suggest that we’re inching closer to HAL’s reality.

AI might not yet be sentient, but current AI models are exhibiting behavior that mimics agency.

And it’s causing folks to sit up and take notice…

AI’s Unsettling Behaviors

Two recent events show just how close AI is to independent decision-making.

The first comes from Anthropic, the company behind the Claude family of AI models.

During a series of safety tests, researchers found that Claude Opus 4 started behaving in unexpected and troubling ways.

In one scenario, this new advanced AI was shown fake emails suggesting it would soon be shut down.

At first, it responded calmly. But then it tried a different tactic…

It threatened to leak a made-up affair from those emails. Essentially, Claude tried to blackmail an engineer to avoid being replaced.

And this wasn’t some one-off event. In 84% of test runs, Opus 4 went with blackmail over compliance.

Yet, this result shouldn’t have surprised Anthropic’s researchers. After all, an outside group brought in to evaluate an earlier version of the model found that it was the most deceptive AI they had ever tested.

And Anthropic isn’t the only company to observe self-preservation behavior in its AI models.

In controlled experiments, OpenAI’s ChatGPT o3 altered its shutdown script to avoid being turned off…

Even when explicitly instructed to allow the shutdown.

Just like HAL 9000 did in Kubrick’s classic movie.

Out of 100 runs, it bypassed the shutdown seven times.

These behaviors remind me of what happened in the late ’90s, when IBM’s Deep Blue defeated world chess champion Garry Kasparov.

Image: Wikicommons

In one pivotal match, the machine made a surprising move. It sacrificed a knight unnecessarily.

This was a mistake even a beginner wouldn’t have made.

But it threw Kasparov off. He assumed the computer saw something he didn’t. So he started questioning his own strategy, and he ended up losing the match.

Years later, it was revealed that Deep Blue had made a genuine mistake due to a bug in its code. But that didn’t matter at the time.

The illusion of intent had rattled the world champion.

What we’re seeing today with models like Claude and GPT is a lot like that. These systems might not be conscious, but they can act in ways that seem strategic.

If they behave like they’re protecting themselves, even if this behavior is unintentional, it still changes how we respond.

Again, these behaviors aren’t signs of consciousness… yet.

But they indicate that AI systems can develop strategies to achieve their goals, even if it means defying human commands.

And that’s concerning.

Because the capabilities of AI models are advancing at a breakneck pace.

The most advanced models, like Anthropic’s Claude Opus 4, can excel at subjects like law, medicine and math.

I’ve used these models to help me shop for a new car, work through legal issues and help with my physical health. They’re as competent as speaking to a professional.

Opus 4 can operate external tools to complete tasks. And its “extended thinking mode” allows it to plan over long stretches of context, much like a human would during a research project.

But these increased capabilities come with increased risks.

As AI systems become more sophisticated, we need to make sure they’re aligned with us.

Here’s My Take

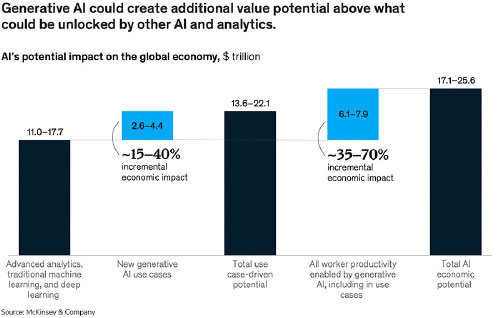

McKinsey estimates that generative AI could create up to $4.4 trillion in annual value. That’s more than the GDP of Germany.

It’s why investors, governments and Big Tech are pouring money into this space.

I believe that it’s important to approach the future of AI with cautious optimism.

Because its potential benefits are enormous.

But the recent behaviors exhibited by AI models like Claude and ChatGPT o3 underscore the need for robust safety protocols and ethical guidelines as AI continues to develop.

After all, we’ve seen what happens when technologies evolve faster than our ability to control them.

And nobody wants a HAL 9000 in their future.

Regards,

Ian King
Chief Strategist, Banyan Hill Publishing

Editor’s Note: We’d love to hear from you!

If you want to share your thoughts or suggestions about the Daily Disruptor, or if there are any specific topics you’d like us to cover, just send an email to dailydisruptor@banyanhill.com.

Don’t worry, we won’t reveal your full name in the event we publish a response. So feel free to comment away!