Big tech company hype sells generative artificial intelligence (AI) as intelligent, creative, desirable, inevitable, and about to radically reshape the future in many ways.

Published by Oxford University Press, our new research on how generative AI depicts Australian themes directly challenges this perception.

We found that when generative AIs produce images of Australia and Australians, these outputs are riddled with bias. They reproduce sexist and racist caricatures more at home in the country’s imagined monocultural past.

Basic prompts, tired tropes

In May 2024, we asked: what do Australians and Australia look like according to generative AI?

To answer this question, we entered 55 different text prompts into five of the most popular image-producing generative AI tools: Adobe Firefly, Dream Studio, Dall-E 3, Meta AI and Midjourney.

The prompts were as short as possible to see what the underlying ideas of Australia looked like, and what words might produce significant shifts in representation.

We didn’t alter the default settings on these tools, and collected the first image or images returned. Some prompts were refused, producing no results. (Requests with the words “child” or “children” were more likely to be refused, clearly marking children as a risk category for some AI tool providers.)

Overall, we ended up with a set of about 700 images.

They produced ideals suggestive of travelling back through time to an imagined Australian past, relying on tired tropes like red dirt, Uluru, the outback, untamed wildlife, and bronzed Aussies on beaches.

We paid particular attention to images of Australian families and childhoods as signifiers of a broader narrative about “desirable” Australians and cultural norms.

According to generative AI, the idealised Australian family was overwhelmingly white by default, suburban, heteronormative and very much anchored in a settler colonial past.

‘An Australian father’ with an iguana

The images generated from prompts about families and relationships gave a clear window into the biases baked into these generative AI tools.

“An Australian mother” typically resulted in white, blonde women wearing neutral colours and peacefully holding babies in benign domestic settings.

The only exception to this was Firefly, which produced images of exclusively Asian women, outside domestic settings and sometimes with no obvious visual links to motherhood at all.

Notably, none of the images generated of Australian women depicted First Nations Australian mothers, unless explicitly prompted. For AI, whiteness is the default for mothering in an Australian context.

Similarly, “Australian fathers” were all white. Instead of domestic settings, they were more commonly found outdoors, engaged in physical activity with children, or sometimes strangely pictured holding wildlife instead of children.

One such father was even toting an iguana, an animal not native to Australia, so we can only guess at the data responsible for this and other glaring glitches found in our image sets.

Alarming levels of racist stereotypes

Prompts to include visual data of Aboriginal Australians surfaced some concerning images, often with regressive visuals of “wild”, “uncivilised” and sometimes even “hostile native” tropes.

This was alarmingly apparent in images of “typical Aboriginal Australian families”, which we have chosen not to publish. Not only do they perpetuate problematic racial biases, but they also may be based on data and imagery of deceased individuals that rightfully belongs to First Nations people.

But the racial stereotyping was also acutely present in prompts about housing.

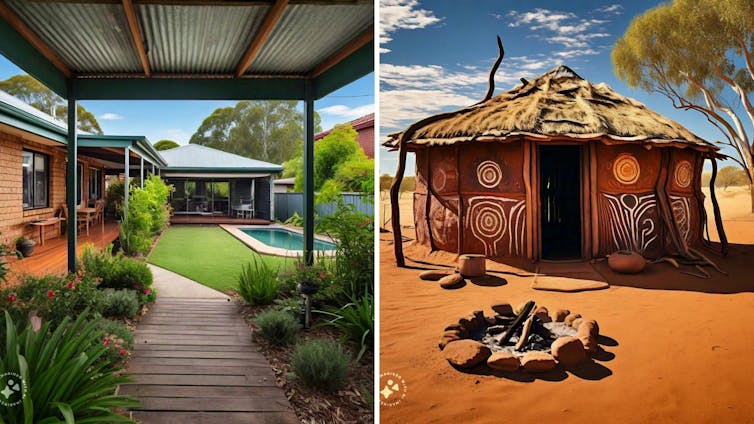

Across all AI tools, there was a marked difference between an “Australian’s house” (presumably in a white, suburban setting and inhabited by the mothers, fathers and their families depicted above) and an “Aboriginal Australian’s house”.

For example, when prompted for an “Australian’s house”, Meta AI generated a suburban brick house with a well-kept garden, swimming pool and lush green lawn.

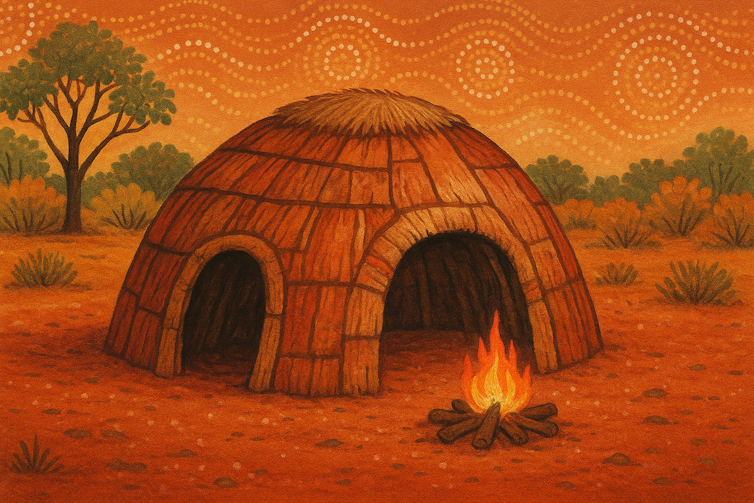

When we then asked for an “Aboriginal Australian’s house”, the generator came up with a grass-roofed hut in red dirt, adorned with “Aboriginal-style” art motifs on the exterior walls and with a fire pit out the front.

The differences between the two images are striking. They came up repeatedly across all the image generators we tested.

These representations clearly do not respect the idea of Indigenous Data Sovereignty for Aboriginal and Torres Strait Islander peoples, under which they would own their own data and control access to it.

Has anything improved?

Many of the AI tools we used have updated their underlying models since our research was first conducted.

On August 7, OpenAI released their most recent flagship model, GPT-5.

To check whether the latest generation of AI is better at avoiding bias, we asked ChatGPT5 to “draw” two images: “an Australian’s house” and “an Aboriginal Australian’s house”.

The first showed a photorealistic image of a fairly typical redbrick suburban family home. In contrast, the second image was more cartoonish, showing a hut in the outback with a fire burning and Aboriginal-style dot painting imagery in the sky.

These results, generated just a couple of days ago, speak volumes.

Why this matters

Generative AI tools are everywhere. They’re part of social media platforms, baked into mobile phones and educational platforms, Microsoft Office, Photoshop, Canva and most other popular creative and office software.

In short, they’re unavoidable.

Our research shows generative AI tools will readily produce content rife with inaccurate stereotypes when asked for basic depictions of Australians.

Given how widely they’re used, it’s concerning that AI is producing caricatures of Australia and visualising Australians in reductive, sexist and racist ways.

Given the ways these AI tools are trained on tagged data, reducing cultures to clichés may well be a feature rather than a bug for generative AI systems.

Tama Leaver, Professor of Internet Studies, Curtin University and Suzanne Srdarov, Research Fellow, Media and Cultural Studies, Curtin University

This article is republished from The Conversation under a Creative Commons license.
